Image Recognition Experiment: Finding Furniture & Appliances in Kitchen Photos

Identifying objects in images is in growing demand across many industries. With cameras now built into smartphones and other devices, image recognition is used in both industrial and consumer applications. The recognition process involves low-level feature extraction to locate lines, regions, or areas with specific textures. Many problems can arise along the way: the same object looks different from different angles, parts of it may be occluded in some images, and shadows or background clutter can blend with its features. Humans perform these tasks almost unconsciously; computers, however, need careful programming and a lot of processing power to recognize objects accurately.

Recently, our engineers received an interesting task: to investigate whether it is possible to process images showing different types of kitchen appliances and furniture and produce a list of the objects present in each picture. This kind of image recognition task is usually called image annotation. A ready-to-use software solution for it, especially one with production-level accuracy, is unlikely to be found on the market today. Nevertheless, our specialists accepted the challenge and successfully delivered a Proof of Concept.

First of all, we wanted to find out how much time the task would take and which methods we should apply.

We built a moderately sized dataset of about 300 images for each of five object classes (the photos were collected from the Internet). Here’s the list of object classes:

1. Gas stovetop

2. Granite countertop

3. Hardwood flooring

4. Kitchen island

5. Stainless steel refrigerator

Since we were limited in time and budget, we decided to approach the image annotation task with more classic methods: dense SIFT descriptors and Fisher vectors that retain some spatial information. In total, we tested five different experimental setups, all of which gave fair results (70-80% accuracy) in quite a short period of time. The whole setup and experimentation process took just about one day of work!

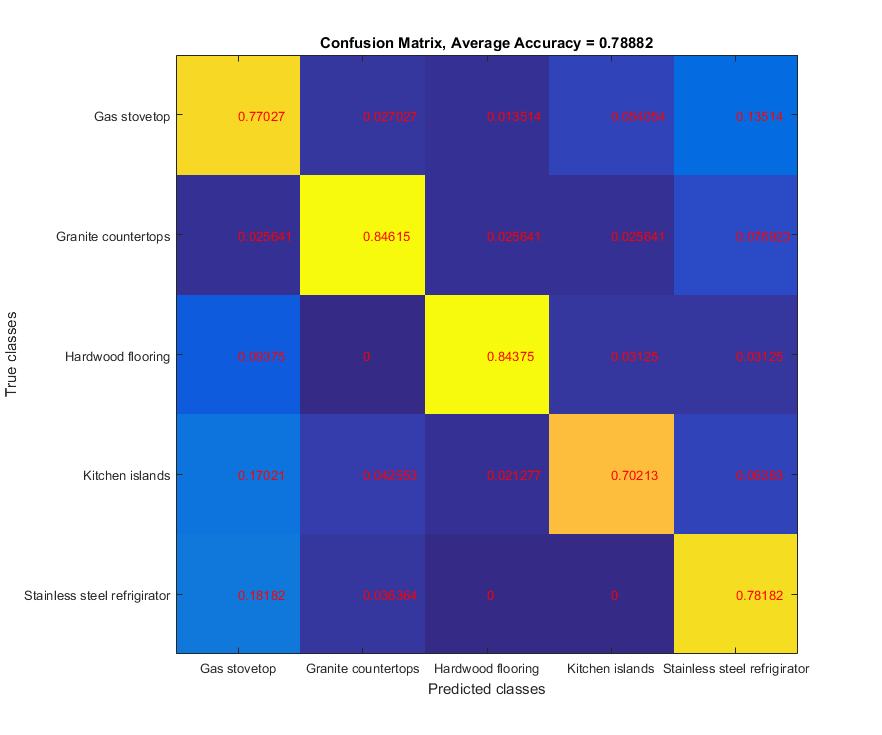
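To make the Fisher vector step more concrete, here is a minimal NumPy sketch of the standard "improved" Fisher vector encoding. It is an illustration under stated assumptions, not the code used in the experiment: the GMM parameters (`weights`, `means`, `variances`) are assumed to have been fitted beforehand on training descriptors, the descriptors stand in for dense SIFT output, and the hypothetical `spatial_fisher_vector` helper shows only one possible way to retain coarse spatial information (encoding horizontal bands of the image separately and concatenating the results).

```python
import numpy as np

def fisher_vector(descriptors, weights, means, variances):
    """Encode N local descriptors (N x D) as one improved Fisher vector
    of length 2*K*D under a diagonal-covariance GMM with K components."""
    x = np.asarray(descriptors, dtype=np.float64)
    k, d = means.shape
    n = x.shape[0]
    if n == 0:
        # No descriptors in this region: return an all-zero encoding.
        return np.zeros(2 * k * d)

    sigma = np.sqrt(variances)                                # (K, D)
    diff = (x[:, None, :] - means[None, :, :]) / sigma[None]  # (N, K, D)

    # Soft assignment (posterior) of each descriptor to each component.
    log_p = (-0.5 * np.sum(diff ** 2, axis=2)
             - np.sum(np.log(sigma), axis=1)[None, :]
             - 0.5 * d * np.log(2.0 * np.pi)
             + np.log(weights)[None, :])
    log_p -= log_p.max(axis=1, keepdims=True)   # numerical stability
    gamma = np.exp(log_p)
    gamma /= gamma.sum(axis=1, keepdims=True)                 # (N, K)

    # Gradients with respect to the GMM means and standard deviations.
    g_mu = np.einsum('nk,nkd->kd', gamma, diff)
    g_mu /= n * np.sqrt(weights)[:, None]
    g_sigma = np.einsum('nk,nkd->kd', gamma, diff ** 2 - 1.0)
    g_sigma /= n * np.sqrt(2.0 * weights)[:, None]

    fv = np.concatenate([g_mu.ravel(), g_sigma.ravel()])
    fv = np.sign(fv) * np.sqrt(np.abs(fv))      # power normalisation
    norm = np.linalg.norm(fv)
    return fv / norm if norm > 0 else fv        # L2 normalisation

def spatial_fisher_vector(descriptors, ys, weights, means, variances,
                          n_strips=3):
    """Hypothetical spatial variant: one Fisher vector for the whole image
    plus one per horizontal band. `ys` holds each descriptor's vertical
    position, normalised to [0, 1]."""
    x = np.asarray(descriptors, dtype=np.float64)
    ys = np.asarray(ys, dtype=np.float64)
    band = np.minimum((ys * n_strips).astype(int), n_strips - 1)
    parts = [fisher_vector(x, weights, means, variances)]
    for b in range(n_strips):
        parts.append(fisher_vector(x[band == b], weights, means, variances))
    return np.concatenate(parts)
```

The resulting fixed-length vectors can then be fed to a linear classifier, which is the usual pairing for Fisher vectors; the choice of classifier is likewise an assumption here.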

Despite the substantial differences between the object classes, almost 80% accuracy was achieved for every class. The best results were achieved for granite countertops and hardwood flooring, which cover large areas with very distinctive patterns. Kitchen islands proved the most challenging to recognize, since images of kitchen islands are usually more ‘panoramic’ and include many objects from other classes. The confusion matrix below illustrates the results of the most accurate experiment.

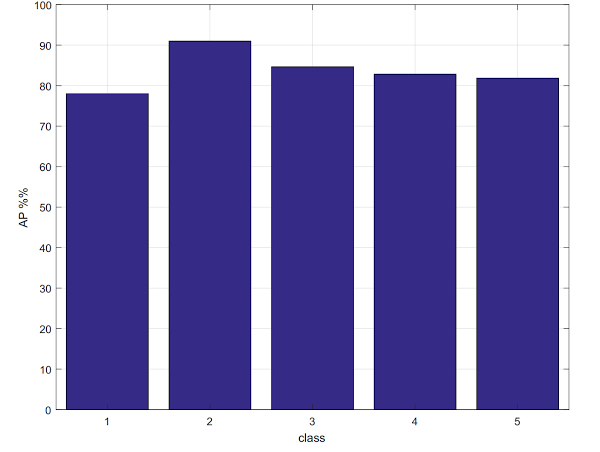
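For readers who want to reproduce this kind of evaluation, the following sketch shows how a confusion matrix and per-class accuracy can be computed from true and predicted labels. The function names are our own for illustration, and the labels in the usage example are made-up toy data, not the results of the experiment.

```python
import numpy as np

# Class names taken from the list above; the index order is an assumption.
CLASSES = [
    "Gas stovetop",
    "Granite countertop",
    "Hardwood flooring",
    "Kitchen island",
    "Stainless steel refrigerator",
]

def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are true classes, columns are predicted classes."""
    y_true = np.asarray(y_true, dtype=int)
    y_pred = np.asarray(y_pred, dtype=int)
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)  # count each (true, predicted) pair
    return cm

def per_class_accuracy(cm):
    """Fraction of each true class that was predicted correctly
    (the diagonal divided by the row totals)."""
    row_totals = cm.sum(axis=1)
    return np.divide(np.diag(cm), row_totals,
                     out=np.zeros(len(cm)), where=row_totals > 0)

# Toy usage with invented labels (indices into CLASSES).
toy_true = [0, 0, 1, 2, 3, 3, 4]
toy_pred = [0, 0, 1, 2, 3, 1, 4]
toy_cm = confusion_matrix(toy_true, toy_pred, len(CLASSES))
for name, acc in zip(CLASSES, per_class_accuracy(toy_cm)):
    print(f"{name}: {acc:.2f}")
```

Off-diagonal cells show which classes get mistaken for one another, which is how a pattern such as kitchen islands being confused with other classes becomes visible.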

And here’s the mean average precision (mAP) diagram for the same experiment:

We think these results can be improved with modern Deep Learning-based techniques, most probably convolutional neural networks. A further accuracy improvement of 5-10% seems realistic to us. Should we have another opportunity to test these approaches, we’ll be eager to share the results.