DevOps for FinTech: Build Secure & Scalable Microservices Architecture with Kubernetes, AWS – Part 1

This is Part 1 of a series of posts about Abto DevOps engineers’ experience building a secure, reliable, and scalable microservices-based architecture, in this case for the FinTech industry.

Introduction

The idea of the project was to build an Artificial Intelligence-based customer service automation solution for a client that works in the fintech domain. The project processes a large amount of sensitive data, which is why security was very important both during development and in further support. The main goal is to process data from several third parties and store it in another remote service. This makes it harder to build secure and reliable communication between all the parties involved.

Project requirements overview

We faced the following key requirements for the DevOps team:

- the system should run code written in several different programming languages;

- the system should be able to securely connect to external back-office software systems;

- the system should automatically scale to be able to handle increasing loads;

- a strong emphasis on the security of the whole project:

  - application-level security;

  - request filtering;

  - secured development and production environments;

  - secured communication;

  - patch management;

  - continuous security monitoring;

- development environment setup and application deployment should be as automated and easy as possible.

Based on these requirements, we decided to build our architecture on the following principles:

- microservice architecture;

- each service should be isolated in a Docker container;

- containers are run by a container orchestration tool (Kubernetes);

- the system will be deployed on the AWS cloud.

High-level work overview

Each microservice is the smallest building block of the system and serves a single feature only. To isolate the microservices, we packed each of them into a separate Docker container. This gives us a template of the microservice, called a Docker image, from which we can deploy as many instances as we need. Images are stored in a Docker container registry. The registry must be accessible to developers and/or CI/CD tools so that they can publish new images, and to the machines that host the containers so that they can download the required image.

The load on a microservice can vary over time and with the state of the whole system. That is why each microservice must be independently scalable, which requires it to be stateless: it must not store any data inside the container. The best way to achieve this is to use central storage or a database that all instances of the microservice can access at the same time if needed.

We can build as many microservices as we need to cover all of the project’s functional requirements. The only obligation is that each of them follows this architecture.

To manage all of this, we selected the following toolset, built mainly on Amazon Web Services (AWS):

- AWS Elastic Container Registry (ECR) – a Docker container registry that stores our microservices’ Docker images. It is accessible to developers, CI/CD tools, and the Kubernetes cluster, which pulls the images to run container instances from them.

- AWS Elastic Kubernetes Service (EKS) – a managed Kubernetes cluster service that is tightly integrated with other AWS services. The base cluster includes master nodes only – the ones that are used for cluster management and do not run any workloads. Worker nodes must be requested separately in the desired amount. They can be added or removed automatically, which is how we scale the cluster.

- AWS Application Load Balancer (ALB) – a highly available load balancer service that provides advanced filtering and routing options because it works at layer 7 of the OSI model. It supports integration with additional security tools such as AWS Web Application Firewall.

- AWS CloudWatch – a monitoring and logging service. It gathers system metrics and reports anomalies based on thresholds.

- AWS Elasticsearch – AWS managed Elasticsearch service with Kibana integrated. All the microservices’ logs are exported to Elasticsearch and visualized by Kibana.

- Prometheus/Grafana – monitoring tools that can gather, organize, parse, and visualize a big variety of metrics.

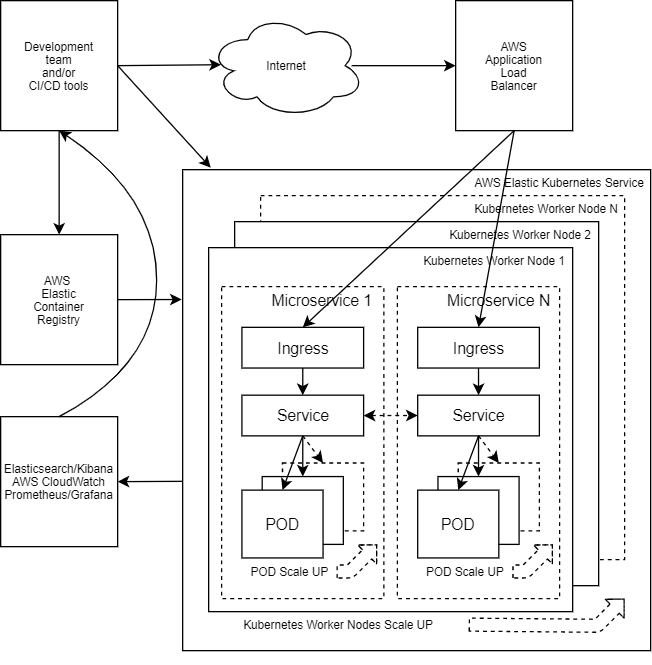

In the picture below you can find the high-level scheme of how it works altogether:

The Kubernetes cluster is deployed on AWS EKS with a few worker nodes. It is configured to add new nodes when resources run short, and it is ready for the microservices to be deployed.

The microservice’s code is packaged into a Docker image, which is built and uploaded to AWS ECR.

The microservice’s Kubernetes deployment configuration is described in YAML format. It includes at least three main Kubernetes objects:

- POD – the smallest deployable computing unit that can be managed in Kubernetes. It holds one or a few Docker containers, and each POD is an instance of our microservice. In our case, it is wrapped by a higher-level object called Deployment, which provides additional benefits such as replica management and rolling updates. We add more PODs when our microservice is under high load. Each POD declares its resource requirements. If the worker nodes lack the resources to run a new POD, that POD stays in the PENDING state, which triggers the addition of a new worker node.

- Service – Kubernetes internal object that points to all PODs (to the whole Deployment) of the microservice. It is like an entry point or load balancer inside the cluster.

- Ingress – Kubernetes object that makes it possible to connect to the internal Service object from outside the cluster. In other words, it is a reverse proxy for the cluster workload. Microservices that do not need incoming connections from the outside world do not need an Ingress object; the Service object is enough for communication inside the cluster.
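To make the relationship between the three objects more concrete, here is a minimal sketch that builds them as plain Python dictionaries and prints them as JSON, which `kubectl apply -f` accepts alongside YAML. It is not the project's actual configuration: the service name, image URL, ports, and resource values are hypothetical, and the sketch uses the current stable API versions, which may differ from the ones the project used. ALB-specific Ingress settings are left out because they depend on the ingress controller in use.

```python
import json

# Hypothetical values, used only for illustration.
APP = "orders-service"
IMAGE = "123456789012.dkr.ecr.us-east-1.amazonaws.com/orders-service:1.0.0"


def build_manifests(app: str, image: str, replicas: int = 2, port: int = 8080) -> list:
    """Return Deployment, Service, and Ingress manifests for one microservice."""
    labels = {"app": app}  # the Service finds the PODs through these labels

    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": app, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {  # POD template wrapped by the Deployment
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": app,
                        "image": image,
                        "ports": [{"containerPort": port}],
                        # Requests are what the scheduler checks against node capacity.
                        "resources": {"requests": {"cpu": "250m", "memory": "256Mi"}},
                    }]
                },
            },
        },
    }

    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": app},
        "spec": {"selector": labels, "ports": [{"port": 80, "targetPort": port}]},
    }

    ingress = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": app},
        "spec": {
            "rules": [{
                "http": {
                    "paths": [{
                        "path": "/",
                        "pathType": "Prefix",
                        # Route outside traffic to the Service defined above.
                        "backend": {"service": {"name": app, "port": {"number": 80}}},
                    }]
                }
            }]
        },
    }
    return [deployment, service, ingress]


if __name__ == "__main__":
    bundle = {"apiVersion": "v1", "kind": "List", "items": build_manifests(APP, IMAGE)}
    print(json.dumps(bundle, indent=2))
```

A microservice that only needs traffic from inside the cluster would simply omit the Ingress entry from the list.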

On the deploy command, Kubernetes creates the required objects. When a POD is created, the worker node downloads the configured Docker image from AWS ECR and starts running it.

An AWS ALB is deployed automatically when the microservice is deployed. It is automatically bound to the appropriate Kubernetes Ingress object, which points to the correct Service object for delivering traffic to the correct POD(s).

When a POD is in the PENDING state because of a lack of resources, the cluster must be scaled up; when worker nodes are idle, it must be scaled down. Cluster Autoscaler continuously monitors the cluster resources and the PODs’ state to make sure that the cluster has the proper capacity.
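The scale-up trigger can be illustrated with a toy model. The sketch below is not the Cluster Autoscaler algorithm, which considers far more than CPU; it only assumes that PODs are placed first-fit by CPU request and that every new node has the same capacity. All numbers are hypothetical, and scale-down is not modelled.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Node:
    cpu_capacity_m: int  # allocatable CPU in millicores
    cpu_used_m: int = 0

    def fits(self, request_m: int) -> bool:
        return self.cpu_used_m + request_m <= self.cpu_capacity_m


def schedule(pod_requests_m: List[int], nodes: List[Node]) -> List[int]:
    """Place each POD on the first node with room; return the requests left pending."""
    pending = []
    for request in pod_requests_m:
        target = next((n for n in nodes if n.fits(request)), None)
        if target is None:
            pending.append(request)  # no node has room: the POD stays PENDING
        else:
            target.cpu_used_m += request
    return pending


def nodes_to_add(pending_m: List[int], node_capacity_m: int) -> int:
    """Count the new nodes needed to place the pending PODs (first-fit)."""
    new_nodes: List[Node] = []
    for request in pending_m:
        if request > node_capacity_m:
            continue  # can never fit on this node size, so scaling up would not help
        target = next((n for n in new_nodes if n.fits(request)), None)
        if target is None:
            target = Node(node_capacity_m)
            new_nodes.append(target)
        target.cpu_used_m += request
    return len(new_nodes)


if __name__ == "__main__":
    cluster = [Node(2000), Node(2000)]
    pending = schedule([1500, 1500, 1000, 500, 500], cluster)
    print("pending requests:", pending)
    print("nodes to add:", nodes_to_add(pending, 2000))
```

In this example the 1000m POD does not fit on either existing node, so it stays pending and one extra node is requested.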

AWS CloudWatch continuously monitors the systems, collects logs, and sends alerts according to preconfigured alert rules.

AWS Elasticsearch receives logs from all the microservices. Kibana makes it possible to review and visualize them.

Prometheus collects all the exported metrics from Kubernetes and additional services. Grafana provides useful dashboards for visualizing any metric stored in Prometheus.

Secure communication

It is important to have several fully configured, identical hosting platforms, called hosting environments. They are used for different purposes, from quick tests to production hosting.

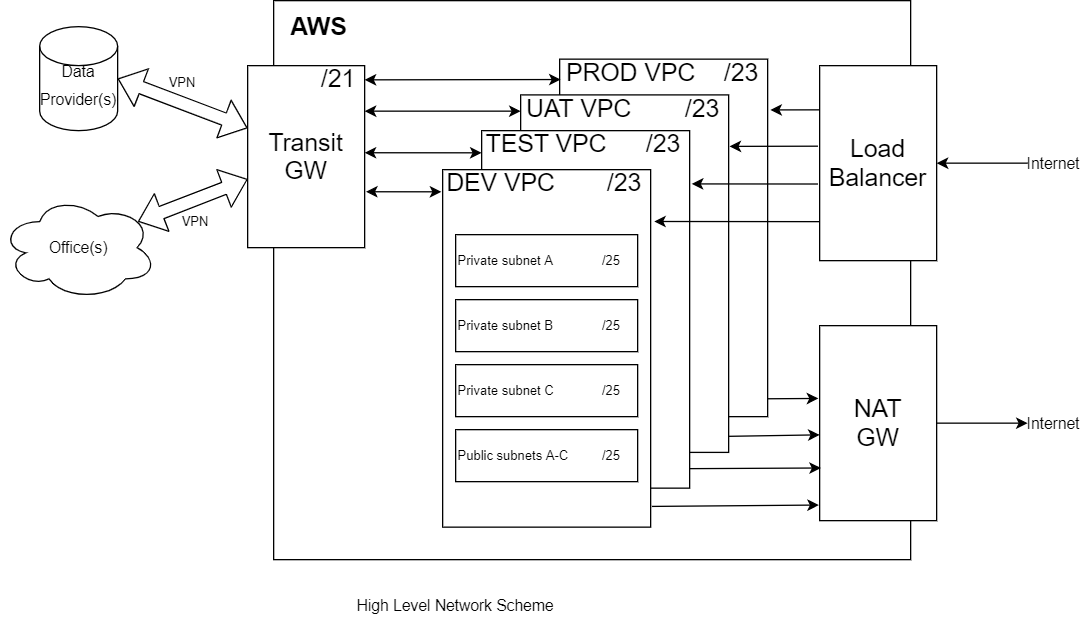

Each environment is isolated to prevent unwanted access across environments and to remote services. Development team members can access an environment only with the required permissions. Connections to the data providers and offices are encrypted via IPSec VPN tunnels and limited based on data provider, office, environment, or developer role. Each communicating site has its own subnet, which makes the whole system more transparent and lets us manage all the access permissions easily.

The environments are placed in separate Virtual Private Clouds (VPC) for isolation. All VPCs are connected to a Transit Gateway that serves the IPSec VPN tunnels.

The default AWS VPC network is large (/16). It does not fit our needs, because we have to build several IPSec tunnels to the offices and data providers. We need two-way communication and do not want to rely on workarounds such as NAT. Therefore, we switched to small VPC networks that fit our needs and do not overlap with the remote encryption domains. We use one /21 network that contains our four environments, each with its own /23 network. Each /23 is divided into four /25 subnets: three of them are used for workloads in three availability zones, and the fourth serves the remaining needs, such as public access.
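The address arithmetic above can be checked with Python's standard `ipaddress` module. This is a sketch under assumptions: the `10.20.0.0/21` block, the environment names, and the remote encryption domain are hypothetical, since the article does not state the real values.

```python
import ipaddress

# Hypothetical values, used only for illustration.
BASE = ipaddress.ip_network("10.20.0.0/21")
ENVIRONMENTS = ["dev", "test", "staging", "prod"]
ROLES = ["workload-az-a", "workload-az-b", "workload-az-c", "shared"]


def build_plan(base, environments, roles):
    """Split the base network into one /23 per environment and four /25 per /23."""
    plan = {}
    for env, env_net in zip(environments, base.subnets(new_prefix=23)):
        plan[env] = dict(zip(roles, env_net.subnets(new_prefix=25)))
    return plan


def find_conflicts(plan, remote_domains):
    """Return (environment, role) pairs whose subnet overlaps a remote network."""
    conflicts = []
    for env, subnets in plan.items():
        for role, net in subnets.items():
            if any(net.overlaps(remote) for remote in remote_domains):
                conflicts.append((env, role))
    return conflicts


if __name__ == "__main__":
    plan = build_plan(BASE, ENVIRONMENTS, ROLES)
    for env, subnets in plan.items():
        for role, net in subnets.items():
            print(f"{env:8} {role:14} {net}  ({net.num_addresses} addresses)")

    # Hypothetical remote encryption domain that collides with part of "prod".
    remote = [ipaddress.ip_network("10.20.6.0/24")]
    print("conflicts:", find_conflicts(plan, remote))
```

A /21 holds 2048 addresses, which is exactly four /23 networks of 512 addresses, and each /23 is exactly four /25 subnets of 128 addresses. Note that AWS reserves five addresses in every subnet, so slightly fewer are usable in practice.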

For security reasons, the assignment of publicly accessible IPs is disabled for the workload subnets. This also removes their direct Internet access; when Internet access is needed, we use the fourth subnet and a NAT gateway.

Networking High-level scheme:

Logging

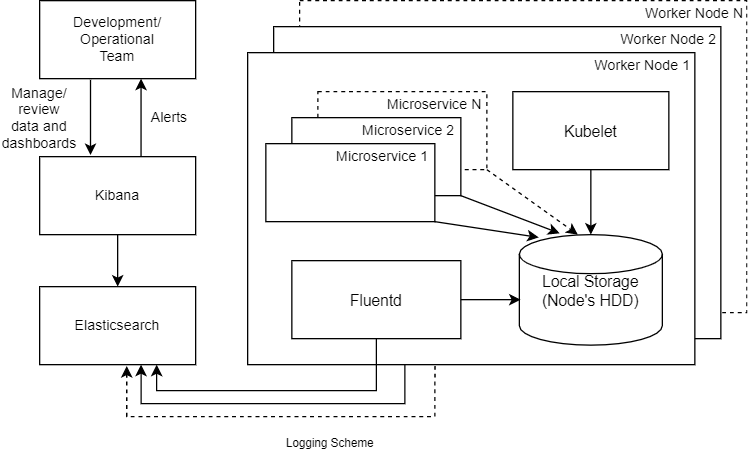

A Kubernetes cluster normally has at least a few nodes. Multiple microservices are deployed, and each of them can have many instances. As a result, we have a lot of applications that handle user or internal requests and generate diagnostic output. But how do we read this data? How do we find the node or microservice instance that processed a given request? The answer is to collect all the log entries in a single place for further processing. The Elastic Stack is a powerful toolset that helps us solve the logging problem.

Elasticsearch, Fluentd, Kibana (EFK) is a multi-component system that can filter, sort, organize, analyze, and visualize all the collected data. The data flow looks as follows:

- Each microservice instance (Docker container instance) writes its output to the console, and that output is stored on the disk of the node it runs on. Kubelet (in simple words, the Kubernetes worker node agent) also stores its logs on the disk. The behavior is the same on all the nodes and for all the instances of all the microservices.

- Fluentd – data collection daemon that collects, filters, and sends the data to the data store for further processing. It reads all the produced data and, after some pre-processing, puts it into Elasticsearch. It is deployed on each worker node as a DaemonSet, which guarantees that a single instance runs on each Kubernetes worker node.

- Elasticsearch – a powerful full-text search engine – provides storage and search functionality for data review and analysis purposes. It receives all the data from Fluentd instances and stores it for further usage.

- Kibana – the user interface for working with the data stored in Elasticsearch. It makes it possible to review and visualize the data, parse it for alerts, etc.
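The first step of this flow, a microservice writing diagnostic output to the console, can be sketched as follows. The example assumes a service written in Python that emits one JSON object per line, which is easier for a collector such as Fluentd to parse than free text; the article does not state the log format actually used, and the service name and the `request_id` field are hypothetical.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render every log record as a single-line JSON object."""

    def __init__(self, service: str):
        super().__init__()
        self.service = service

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "service": self.service,
            "message": record.getMessage(),
            # Optional field passed through `extra=`; helps trace one request.
            "request_id": getattr(record, "request_id", None),
        }
        return json.dumps(entry)


def build_logger(service: str, stream=sys.stdout) -> logging.Logger:
    """Create a logger that writes JSON lines to the given stream (stdout by default)."""
    logger = logging.getLogger(service)
    logger.setLevel(logging.INFO)
    logger.handlers.clear()  # avoid duplicate handlers on repeated calls
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter(service))
    logger.addHandler(handler)
    logger.propagate = False
    return logger


if __name__ == "__main__":
    log = build_logger("orders-service")  # hypothetical service name
    log.info("payment accepted", extra={"request_id": "req-42"})
```

Including a request identifier in every entry is one way to answer the earlier question of which instance processed a given request once all the logs are searchable in one place.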

We selected Fluentd for data collection and its delivery to Elasticsearch. There are a few similar tools that can be more useful for other projects. The most popular are Logstash and Beats.

Everything described above covers the high-level application logs only. It does not cover the security, hardware, and other log entries generated by AWS services and/or third parties. At least the AWS CloudWatch and AWS CloudTrail logs should also be processed and forwarded to Elasticsearch to build a unified, centralized log processing system that simplifies day-to-day management and support.

In Part 2 of the series we’ll go through the following aspects of the architecture:

- Application configuration

- Environment-related Deployment Tools

- Helm chart

- CI/CD implementation

- Setting up a local development environment

Finally, we’ll look at the pros, cons and potential improvements to the architecture.

Stay tuned for the updates!